Tensorflow Recipe¶

The Tensorflow Recipe is a Qt QML project that you can use to try out the principles of object detection. We have tried to keep it to the minimum codebase needed to display a video stream together with object detection based on Google Coral and Tensorflow Lite. It is designed around the SSDLite MobileDet model, ssdlite_mobiledet_coco_qat_postprocess_edgetpu.tflite, which you can download together with its label file from the Google Coral web page. This model can detect 90 different objects and returns a classification and a position for each detection. The recipe should, however, work with most models that return rectangles in their result.

You can use this guide as a tutorial to build up the project or just to look up parts of the code that you would like to take a closer look at.

We recommend running the Tensorflow Recipe in EGLFS mode.

To run the recipe on the display, you need a Linux image that includes the Google Coral drivers, and the ai-runtime.ipk package must be installed.

Project and Source Code¶

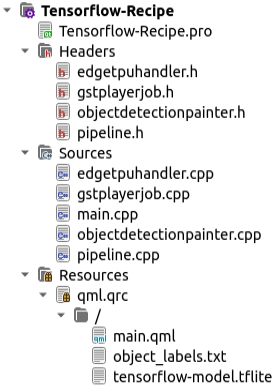

Start by creating a Qt QML project for the V1000 or V1200 display along with the classes shown in figure 1.

Figure 1. The Tensorflow Recipe project.

The files tensorflow-model.tflite and object_labels.txt are the Tensorflow Lite model and its label file. Both are embedded in the application as Qt resources.

The recipe is created for the SSDLite MobileDet model, ssdlite_mobiledet_coco_qat_postprocess_edgetpu.tflite, so add the downloaded model and its label file to the project under these names. Other Tensorflow Lite models that return rectangles in their result should also work.
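The label file pairs a class index with a name on each line. A minimal sketch of reading it in plain C++, assuming the Coral-style layout "<index> <label>" where a label such as "traffic light" may contain spaces. parseLabels() is a hypothetical helper for illustration, not part of the recipe.

```cpp
#include <cctype>
#include <map>
#include <sstream>
#include <string>

// Hypothetical helper: parses label-file text where each line is
// "<index> <label>", for example "0  person" or "9  traffic light".
// Only the first token is the index; the rest of the line is the label.
std::map<int, std::string> parseLabels(const std::string &text)
{
    std::map<int, std::string> labels;
    std::istringstream lines(text);
    std::string line;
    while (std::getline(lines, line)) {
        std::istringstream fields(line);
        int index = 0;
        if (!(fields >> index))
            continue; // skip empty or malformed lines
        std::string label;
        std::getline(fields >> std::ws, label); // rest of the line
        // Trim trailing whitespace such as '\r' from Windows line endings
        while (!label.empty() && std::isspace(static_cast<unsigned char>(label.back())))
            label.pop_back();
        if (!label.empty())
            labels[index] = label;
    }
    return labels;
}
```

Splitting only at the first whitespace keeps multi-word labels intact, which a plain "split into exactly two parts" check would silently drop.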

In the project file, we need to add references to the header files and libraries that are needed for GStreamer, Tensorflow, OpenCV and Edge TPU.

CONFIG += c++11 link_pkgconfig
PKGCONFIG += gstreamer-1.0

INCLUDEPATH += /opt/crosscontrol/include
INCLUDEPATH += /opt/crosscontrol/include/tensorflow
INCLUDEPATH += /opt/crosscontrol/include/tensorflow/lite/tools/make/downloads/flatbuffers/include
INCLUDEPATH += /opt/crosscontrol/arm/imx8/opencv/include/opencv4

LIBS += -lpthread -ldl -lrt
LIBS += -L/opt/crosscontrol/arm/imx8/lib -ledgetpu -ltensorflow-lite
LIBS += -L/opt/crosscontrol/arm/imx8/opencv/lib -lopencv_core \
    -lopencv_imgproc \
    -lopencv_imgcodecs \
    -lopencv_videoio \
    -lopencv_flann \
    -lopencv_features2d \
    -lopencv_calib3d \
    -lopencv_highgui \
    -lopencv_objdetect

...

main.cpp¶

The main.cpp file contains the main(..) function, which creates the application and sets up the custom classes. The two most interesting parts are the probe callback, which is defined here and attached to the pipeline, and the call to scheduleRenderJob(..), which schedules a GstPlayerJob that starts the GStreamer pipeline once the window begins rendering. You will also find videoItem, the QML item that displays the video stream once it has been assigned as the widget of the qmlglsink element in the GStreamer pipeline.

The gst_pad_probe_callback(..) function is the callback for a so-called pad probe on the pipeline. A probe is called for every buffer that passes the pad, which makes it possible to fetch single frames from the video stream and forward them to the object detection.

/******************************************************************************
* Include Files
******************************************************************************/
#include <QGuiApplication>
#include <QCommandLineParser>
#include <QQmlApplicationEngine>
#include <QQmlContext>
#include <QQuickWindow>
#include <QQuickItem>
#include <QDebug>
#include <cstdlib>
#include <gst/gst.h>
#include "gstplayerjob.h"
#include "edgetpuhandler.h"
#include "objectdetectionpainter.h"
#include "pipeline.h"

/******************************************************************************
* Manifest Constants, Macros
******************************************************************************/

GstPadProbeReturn gst_pad_probe_callback(GstPad *pad, GstPadProbeInfo *info, gpointer user_data)
{
    Q_UNUSED(user_data)

    // Skip the frame if there is no handler or if a detection is already running
    EdgeTPUHandler *edge = EdgeTPUHandler::getSingleton();
    if (edge == nullptr || edge->isRunningDetection())
        return GST_PAD_PROBE_OK;

    GstBuffer *buffer = GST_PAD_PROBE_INFO_BUFFER(info);
    if (buffer == NULL)
        return GST_PAD_PROBE_OK;

    GstCaps *caps = gst_pad_get_current_caps(pad);
    if (caps == NULL)
        return GST_PAD_PROBE_OK;

    GstStructure *str = gst_caps_get_structure(caps, 0);
    gint height = 0;
    gint width = 0;
    gst_structure_get_int(str, "height", &height);
    gst_structure_get_int(str, "width", &width);
    const gchar *format = gst_structure_get_string(str, "format");

    // I420 and YV12 are planar 4:2:0 formats with 12 bits per pixel: a full-size
    // luminance plane Y followed by two quarter-size chroma planes (U then V in I420,
    // V then U in YV12). The single-channel byte matrix is therefore 12/8 = 1.5 times the height.
    // Other formats are skipped.
    int conversion = -1;
    if (format != NULL && g_str_equal(format, "I420"))
        conversion = cv::COLOR_YUV2RGB_I420;
    else if (format != NULL && g_str_equal(format, "YV12"))
        conversion = cv::COLOR_YUV2RGB_YV12;

    GstMapInfo map;
    if (conversion >= 0 && width > 0 && height > 0 && gst_buffer_map(buffer, &map, GST_MAP_READ))
    {
        cv::Mat original(height * 3 / 2, width, CV_8UC1, map.data);
        // The model requires a 3-channel RGB image, so convert the frame to that.
        // cvtColor copies the pixels, so the buffer can be unmapped afterwards.
        cv::Mat matImage;
        cv::cvtColor(original, matImage, conversion, 3);
        gst_buffer_unmap(buffer, &map);
        edge->startDectection(matImage);
    }

    gst_caps_unref(caps);
    return GST_PAD_PROBE_OK;
}

int main(int argc, char *argv[])
{
    int ret = EXIT_SUCCESS;
    gst_init(&argc, &argv);
    {
        QGuiApplication app(argc, argv);

        QCommandLineParser parser;
        QCommandLineOption portOption(QStringList() << "p" << "port",
                                      "Port number to receive video on (default=50004)",
                                      "port", "50004");
        parser.addOption(portOption);
        parser.addHelpOption();
        parser.process(app);
        const int portNumber = parser.value(portOption).toInt();

        // The handler must exist before QML creates the ObjectDetectionPainter,
        // which looks it up through EdgeTPUHandler::getSingleton()
        EdgeTPUHandler edge;
        qmlRegisterType<ObjectDetectionPainter>("CrossControl", 1, 0, "ObjectDetectionPainter");

        // Creating the pipeline also creates the qmlglsink element, which registers
        // the GstGLVideoItem QML type, so it must happen before main.qml is loaded
        Pipeline *pipeline = Pipeline::createPipeline("video-pipeline", 1, portNumber);
        if (pipeline == nullptr) {
            qCritical() << "Failed to create pipeline";
            exit(EXIT_FAILURE);
        }
        pipeline->setProbeCallback(gst_pad_probe_callback);

        QQmlApplicationEngine engine;
        // Set edgeTPUHandler as a context property so we can access it from QML
        engine.rootContext()->setContextProperty("edgeTPUHandler", &edge);
        engine.load(QUrl(QStringLiteral("qrc:/main.qml")));
        if (engine.rootObjects().isEmpty()) {
            qCritical() << "Failed to load main.qml";
            delete pipeline;
            exit(EXIT_FAILURE);
        }

        QQuickWindow *rootObject = qobject_cast<QQuickWindow *>(engine.rootObjects().first());
        g_assert(rootObject);

        // Find the videoItem and set it as the widget that the sink renders into
        QQuickItem *videoItem = rootObject->findChild<QQuickItem *>("videoItem");
        g_assert(videoItem);
        g_object_set(pipeline->getSink(), "widget", videoItem, NULL);

        // Start the pipeline once the window begins rendering
        rootObject->scheduleRenderJob(new GstPlayerJob(pipeline->getGstPipeline()),
                                      QQuickWindow::BeforeSynchronizingStage);

        ret = app.exec();
        delete pipeline;
    }
    gst_deinit();
    return ret;
}
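The probe callback wraps the mapped buffer in a single-channel matrix that is 1.5 times the frame height. The arithmetic behind that factor can be sketched as follows, assuming even dimensions and tightly packed rows without stride padding, which is also what the callback assumes. A more robust version could read the real plane strides and offsets with gst_video_info_from_caps(). Planar420Layout and planar420Layout() are hypothetical names for illustration.

```cpp
#include <cstddef>

// Byte layout of one planar 4:2:0 frame (I420 or YV12). Both formats store a
// full-resolution Y plane followed by two quarter-size chroma planes; they
// differ only in the chroma order (I420: U then V, YV12: V then U).
// Assumes even width and height and no row padding.
struct Planar420Layout {
    std::size_t lumaSize;     // width * height bytes of Y
    std::size_t chromaSize;   // (width / 2) * (height / 2) bytes per chroma plane
    std::size_t firstChroma;  // offset of U (I420) or V (YV12)
    std::size_t secondChroma; // offset of V (I420) or U (YV12)
    std::size_t totalSize;    // equals width * height * 3 / 2
};

Planar420Layout planar420Layout(std::size_t width, std::size_t height)
{
    Planar420Layout l{};
    l.lumaSize = width * height;
    l.chromaSize = (width / 2) * (height / 2);
    l.firstChroma = l.lumaSize;
    l.secondChroma = l.lumaSize + l.chromaSize;
    l.totalSize = l.lumaSize + 2 * l.chromaSize;
    return l;
}
```

For a 640x480 frame the total is 460800 bytes, that is 720 rows of 640 bytes, which is exactly 1.5 × 480.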

edgetpuhandler.cpp¶

The EdgeTPUHandler is the object detection “engine”. It handles access to the Coral accelerator, adapts and processes each image, runs the inference and collects the result. When a detection has completed, the detectionDone() signal is emitted to indicate that a new result is ready to be drawn on screen.
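The handler processes at most one frame at a time: the probe skips frames while isRunningDetection() is true, startDectection() sets the flag and finalizeDetection() clears it. The same "drop frames while busy" gating can be sketched in standard C++ as below. DetectionGate is a hypothetical class, not part of the recipe; compare_exchange_strong() makes the check and the set one atomic step, so two threads can never both start a detection.

```cpp
#include <atomic>

// Hypothetical gate that admits at most one detection at a time: frames that
// arrive while a detection is running are dropped instead of queued.
class DetectionGate {
public:
    // Returns true if the caller may start a detection.
    bool tryStart()
    {
        bool expected = false;
        // Atomically flip false -> true; fails if a detection is already running
        return m_running.compare_exchange_strong(expected, true);
    }

    // Called when the detection has finished and its result has been stored.
    void finish() { m_running.store(false); }

    bool isRunning() const { return m_running.load(); }

private:
    std::atomic<bool> m_running{false};
};
```

Dropping frames rather than queueing them keeps the overlay close to the live video, since a queue would grow whenever inference is slower than the camera frame rate.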

Implementation¶

/******************************************************************************
* Include Files
******************************************************************************/
#include "edgetpuhandler.h"
#include <QDebug>
#include <QFile>
#include <QString>
#include <QTextStream>
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstring>
#include "tensorflow/lite/builtin_op_data.h"
#include "tensorflow/lite/kernels/register.h"

/******************************************************************************

* Class Methods

******************************************************************************/

// Pointer to the single EdgeTPUHandler instance, set by the constructor
static EdgeTPUHandler* singleton = nullptr;

EdgeTPUHandler::EdgeTPUHandler(QObject *parent) : QObject(parent)

{

singleton = this;

initEngine();

readDetectionLabels();

}

EdgeTPUHandler* EdgeTPUHandler::getSingleton()

{

return singleton;

}

void EdgeTPUHandler::initEngine()
{
    // Open the Coral Edge TPU device
    m_edgeTpuContext = edgetpu::EdgeTpuManager::GetSingleton()->OpenDevice();
    if (m_edgeTpuContext == nullptr) {
        qCritical() << "Failed to open Edge TPU device";
        exit(EXIT_FAILURE);
    }

    // Read the model from the Qt resource. BuildFromBuffer does not copy the
    // data, so m_modelFile must stay alive as long as m_model
    QFile file(m_modelPath);
    if (!file.open(QIODevice::ReadOnly)) {
        qCritical() << "Failed to read model file";
        exit(EXIT_FAILURE);
    }
    m_modelFile = file.readAll();
    m_model = tflite::FlatBufferModel::BuildFromBuffer(m_modelFile.constData(), m_modelFile.size());
    if (m_model == nullptr) {
        qCritical() << "Failed to build model";
        exit(EXIT_FAILURE);
    }

    // Register the Edge TPU custom op so that the interpreter can run it on the accelerator
    tflite::ops::builtin::BuiltinOpResolver resolver;
    resolver.AddCustom(edgetpu::kCustomOp, edgetpu::RegisterCustomOp());
    if (tflite::InterpreterBuilder(*m_model, resolver)(&m_interpreter) != kTfLiteOk) {
        qCritical() << "Failed to build interpreter";
        exit(EXIT_FAILURE);
    }

    m_interpreter->SetExternalContext(kTfLiteEdgeTpuContext, m_edgeTpuContext.get());
    m_interpreter->SetNumThreads(1);
    if (m_interpreter->AllocateTensors() != kTfLiteOk) {
        qCritical() << "Failed to allocate tensors";
        exit(EXIT_FAILURE);
    }

    // The input tensor has the shape [1, height, width, channels]
    const auto *dims = m_interpreter->tensor(m_interpreter->inputs()[0])->dims;
    m_wantedHeight = dims->data[1];
    m_wantedWidth = dims->data[2];
    m_wantedChannels = dims->data[3];

    // Store the number of values in each output tensor, counted the same way as in runInference()
    const auto &outputs = m_interpreter->outputs();
    for (size_t i = 0; i < outputs.size(); i++) {
        const auto *tensor = m_interpreter->tensor(outputs[i]);
        const size_t valueSize = tensor->type == kTfLiteUInt8 ? sizeof(uint8_t) : sizeof(float);
        m_outputShape.append(tensor->bytes / valueSize);
    }

    QObject::connect(&m_watcher, &QFutureWatcher<std::vector<ObjectDetectionRectangle>>::finished,
                     this, &EdgeTPUHandler::finalizeDetection);
}

std::vector<ObjectDetectionRectangle> EdgeTPUHandler::processFrame(cv::Mat &frame)
{
    const int frameWidth = frame.cols;
    const int frameHeight = frame.rows;

    // Scale the frame to the input size of the model
    cv::resize(frame, frame, cv::Size(m_wantedWidth, m_wantedHeight));

    // Copy the pixels into a flat byte vector (row by row, channels interleaved)
    if (!frame.isContinuous())
        frame = frame.clone();
    const std::vector<uint8_t> input(frame.data, frame.data + frame.total() * frame.elemSize());

    const auto rawResults = runInference(input);

    // Discard detections with a confidence of 50 % or less
    const float threshold = 0.5f;
    auto detectionResult = objectEstimateWithOutputVector(rawResults, threshold);

    setInputHeight(frameHeight);
    setInputWidth(frameWidth);
    return detectionResult;
}

bool EdgeTPUHandler::isRunningDetection() const

{

return m_isRunningDetection;

}

void EdgeTPUHandler::startDectection(cv::Mat frame)

{

if(m_isRunningDetection){

return;

}

m_isRunningDetection = true;

QFuture<std::vector<ObjectDetectionRectangle>> future;

future = QtConcurrent::run([=](cv::Mat img) {

return processFrame(img);

}, frame);

m_watcher.setFuture(future);

}

void EdgeTPUHandler::finalizeDetection()

{

m_candidates = m_watcher.result();

m_isRunningDetection = false;

emit detectionDone();

}

const std::vector<ObjectDetectionRectangle>& EdgeTPUHandler::getCandidates()

{

return m_candidates;

}

int EdgeTPUHandler::inputWidth() const

{

return m_inputWidth;

}

int EdgeTPUHandler::inputHeight() const

{

return m_inputHeight;

}

void EdgeTPUHandler::setInputWidth(int inputWidth)

{

if (m_inputWidth == inputWidth)

return;

m_inputWidth = inputWidth;

emit inputWidthChanged(m_inputWidth);

}

void EdgeTPUHandler::setInputHeight(int inputHeight)

{

if (m_inputHeight == inputHeight)

return;

m_inputHeight = inputHeight;

emit inputHeightChanged(m_inputHeight);

}

std::vector<float> EdgeTPUHandler::runInference(const std::vector<uint8_t> &inputData)
{
    std::vector<float> outputData;

    // Copy the image into the input tensor, which must have exactly the same size
    TfLiteTensor *inputTensor = m_interpreter->input_tensor(0);
    if (inputData.size() != inputTensor->bytes) {
        qCritical() << "Input size" << inputData.size()
                    << "does not match the model input size" << inputTensor->bytes;
        return outputData;
    }
    auto *input = m_interpreter->typed_input_tensor<uint8_t>(0);
    std::memcpy(input, inputData.data(), inputData.size());

    if (m_interpreter->Invoke() != kTfLiteOk) {
        qCritical() << "Failed to run inference";
        return outputData;
    }

    // Concatenate all output tensors into one flat vector of floats
    const auto &outputs = m_interpreter->outputs();
    size_t outIdx = 0;
    for (size_t i = 0; i < outputs.size(); ++i) {
        const TfLiteTensor *outTensor = m_interpreter->tensor(outputs[i]);
        assert(outTensor != nullptr);
        if (outTensor->type == kTfLiteUInt8) {
            // Dequantize: real = (quantized - zero_point) * scale
            const size_t numValues = outTensor->bytes;
            outputData.resize(outIdx + numValues);
            const uint8_t *output = m_interpreter->typed_output_tensor<uint8_t>(i);
            for (size_t j = 0; j < numValues; ++j)
                outputData[outIdx++] = (output[j] - outTensor->params.zero_point) * outTensor->params.scale;
        } else if (outTensor->type == kTfLiteFloat32) {
            const size_t numValues = outTensor->bytes / sizeof(float);
            outputData.resize(outIdx + numValues);
            const float *output = m_interpreter->typed_output_tensor<float>(i);
            std::copy(output, output + numValues, outputData.begin() + outIdx);
            outIdx += numValues;
        } else {
            qCritical() << "Tensor" << outTensor->name << "has unsupported output type:" << outTensor->type;
        }
    }
    return outputData;
}

std::vector<ObjectDetectionRectangle> EdgeTPUHandler::objectEstimateWithOutputVector(const std::vector<float> &infs, const float threshold)
{
    std::vector<ObjectDetectionRectangle> infResults;

    // Four outputs are expected: boxes, classes, scores and number of detections
    size_t expectedSize = 0;
    for (size_t size : m_outputShape)
        expectedSize += size;
    if (m_outputShape.size() < 4 || infs.size() < expectedSize)
        return infResults;

    // Split up the flat vector into one vector per output tensor, according to m_outputShape,
    // i.e. coordinates, categories, scores and number of detections
    std::vector<std::vector<float>> results(m_outputShape.size());
    size_t offset = 0;
    for (int i = 0; i < m_outputShape.size(); ++i) {
        const size_t outputSize = m_outputShape[i];
        results[i].assign(infs.begin() + offset, infs.begin() + offset + outputSize);
        offset += outputSize;
    }
    if (results[3].empty())
        return infResults;

    // Number of detections, limited to the amount of data actually returned
    const size_t count = std::min({static_cast<size_t>(std::max(0L, std::lround(results[3][0]))),
                                   results[0].size() / 4, results[1].size(), results[2].size()});
    for (size_t i = 0; i < count; i++) {
        const float score = results[2][i];
        if (score <= threshold)
            continue;
        ObjectDetectionRectangle result;
        // Unknown class ids give an empty label instead of inserting into the map
        result.label = m_labelsMap.value(static_cast<int>(std::lround(results[1][i])));
        // Each box is [ymin, xmin, ymax, xmax], normalized to 0..1.
        // width and height hold the bottom-right corner (xmax, ymax), not a size
        result.y = results[0][i * 4];
        result.x = results[0][i * 4 + 1];
        result.height = results[0][i * 4 + 2];
        result.width = results[0][i * 4 + 3];
        result.score = score;
        infResults.push_back(result);
    }
    return infResults;
}

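void EdgeTPUHandler::readDetectionLabels()
{
    m_labelsMap.clear();
    QFile inFile(m_labelPath);
    if (!inFile.open(QIODevice::ReadOnly | QIODevice::Text)) {
        qCritical() << "Failed to read label file";
        return;
    }

    // Each line has the format "<index> <label>". Labels may contain spaces
    // (for example "traffic light"), so only split at the first whitespace
    QTextStream inStream(&inFile);
    while (!inStream.atEnd()) {
        const QString line = inStream.readLine().trimmed();
        int separator = 0;
        while (separator < line.size() && !line.at(separator).isSpace())
            ++separator;
        if (separator == 0 || separator == line.size())
            continue;
        bool ok = false;
        const int ix = line.left(separator).toInt(&ok);
        const QString label = line.mid(separator).trimmed();
        if (ok && !label.isEmpty())
            m_labelsMap[ix] = label;
    }
}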
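For quantized uint8 outputs, runInference() maps each stored value back to a real number with the tensor's zero point and scale. A standalone sketch of that affine dequantization; dequantize() is a hypothetical helper, and the quantization parameters in the usage below are made up for illustration.

```cpp
#include <cstdint>
#include <vector>

// Converts quantized uint8 values back to real numbers using the affine
// quantization parameters stored with a TensorFlow Lite tensor:
//   real = (quantized - zero_point) * scale
std::vector<float> dequantize(const std::vector<uint8_t> &quantized,
                              int32_t zeroPoint, float scale)
{
    std::vector<float> real;
    real.reserve(quantized.size());
    for (uint8_t q : quantized)
        real.push_back((static_cast<int32_t>(q) - zeroPoint) * scale);
    return real;
}
```

With a zero point of 128 and a scale of 0.5, the stored bytes 0, 128 and 255 become -64.0, 0.0 and 63.5.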

Header File¶

#ifndef EDGETPUHANDLER_H

#define EDGETPUHANDLER_H

/******************************************************************************

* Manifest Constants, Macros

******************************************************************************/

/******************************************************************************

* Include Files

******************************************************************************/

#include <QObject>
#include <edgetpu.h>
#include <gst/gst.h>
#include <QFuture>
#include <QFutureWatcher>
#include <QtConcurrent/QtConcurrent>
#include <QMap>
#include <QList>
#include <atomic>
#include <memory>
#include <vector>
#include <tensorflow/lite/interpreter.h>
#include <tensorflow/lite/model.h>
#include <opencv2/opencv.hpp>

/******************************************************************************

* Class Definition

******************************************************************************/

/**
* @brief The ObjectDetectionRectangle struct holds the data representing one detection.
* All coordinates are normalized to the range 0..1 of the image width or height.
*/
struct ObjectDetectionRectangle {
    /**
    * @brief x the left edge of the detection
    */
    float x;
    /**
    * @brief y the top edge of the detection
    */
    float y;
    /**
    * @brief width the right edge of the detection (xmax), not the width of the box
    */
    float width;
    /**
    * @brief height the bottom edge of the detection (ymax), not the height of the box
    */
    float height;
    /**
    * @brief label the label assigned to the detection
    */
    QString label;
    /**
    * @brief score the score/confidence of the detection, in the range 0..1
    */
    float score;
};

/**

* @brief Responsible for creating and handling the EdgeTPU context, as well as the detection and classification of objects

*/

class EdgeTPUHandler : public QObject

{

Q_OBJECT

public:

/**

* @brief Class constructor

* @param parent The QObject parent

*/

explicit EdgeTPUHandler(QObject *parent = nullptr);

Q_PROPERTY(int inputWidth READ inputWidth WRITE setInputWidth NOTIFY inputWidthChanged)

Q_PROPERTY(int inputHeight READ inputHeight WRITE setInputHeight NOTIFY inputHeightChanged)

/**

* @brief Gets a pointer to the singleton class

* @return A pointer to the class

*/

static EdgeTPUHandler *getSingleton();

/**

* @brief checks if the EdgeTPU is running a detection

* @return a bool with the result

*/

bool isRunningDetection() const;

/**

* @brief attempts to start a new detection

* @param frame The opencv frame to run the detection on

*/

void startDectection(cv::Mat frame);

/**

* @brief Gets the object detection candidates from the latest detection

* @return A vector with the result

*/

const std::vector<ObjectDetectionRectangle> &getCandidates();

/**

* @brief Getter for input width

* @return the value

*/

int inputWidth() const;

/**

* @brief Getter for input height

* @return the value

*/

int inputHeight() const;

signals:

/**

* @brief emits when the detection is done

*/

void detectionDone();

/**

* @brief emits when inputWidth is changed

* @param inputWidth the new inputWidth

*/

void inputWidthChanged(int inputWidth);

/**

* @brief emits when inputHeight is changed

* @param inputHeight the new inputHeight

*/

void inputHeightChanged(int inputHeight);

private slots:

/**

* @brief sets the inputWidth

* @param inputWidth the new inputWidth

*/

void setInputWidth(int inputWidth);

/**

* @brief sets the new inputHeight

* @param inputHeight the new inputHeight

*/

void setInputHeight(int inputHeight);

/**

* @brief collects the result and signals that the detection is complete

*/

void finalizeDetection();

private:

/**

* @brief Initializes the EdgeTPU engine

*/

void initEngine();

/**

* @brief reads the detection labels from file

*/

void readDetectionLabels();

/**

* @brief wrapper method for object detection & classification

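* @param frame the RGB frame to process; it is resized in place to the model input size

* @return the detections above the confidence threshold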

*/

std::vector<ObjectDetectionRectangle> processFrame(cv::Mat &frame);

/**

* @brief performs the raw detection

* @param inputData the data to perform detection on

* @return the raw results

*/

std::vector<float> runInference(const std::vector<uint8_t> &inputData);

/**

* @brief converts the raw results into ObjectDetectionRectangles

* @param infs the raw results

* @param threshold detections with a confidence at or below this threshold (range 0-1) are discarded

* @return a vector of ObjectDetectionRectangles above the confidence threshold

*/

std::vector<ObjectDetectionRectangle> objectEstimateWithOutputVector(const std::vector<float> &infs, const float threshold);

const QString m_modelPath = ":/tensorflow-model.tflite"; //"/opt/tflite/ssdlite_mobiledet_coco_qat_postprocess_edgetpu.tflite";

const QString m_labelPath = ":/object_labels.txt";

QMap<int, QString> m_labelsMap;

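// Accessed from both the GStreamer streaming thread and the main thread
std::atomic<bool> m_isRunningDetection{false};
int m_wantedHeight = 0;
int m_wantedWidth = 0;
int m_wantedChannels = 0;
QFutureWatcher<std::vector<ObjectDetectionRectangle>> m_watcher;
QList<size_t> m_outputShape;
std::vector<ObjectDetectionRectangle> m_candidates;
int m_inputWidth = 0;
int m_inputHeight = 0;
// Members are destroyed in reverse order of declaration: the interpreter must go
// first, since it uses the model, the model buffer and the Edge TPU context
QByteArray m_modelFile;
std::shared_ptr<edgetpu::EdgeTpuContext> m_edgeTpuContext;
std::unique_ptr<tflite::FlatBufferModel> m_model;
std::unique_ptr<tflite::Interpreter> m_interpreter;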

};

#endif // EDGETPUHANDLER_H
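objectEstimateWithOutputVector() assumes that the model ends in an SSD post-processing step with four outputs: boxes as [ymin, xmin, ymax, xmax] normalized to 0..1, class ids, scores and the number of detections. This matches the SSDLite MobileDet model used here; other models may order their outputs differently. A self-contained sketch of that decoding step, where Detection and decodeDetections() are hypothetical names and the count is clamped so that a corrupt value cannot cause reads past the end of the buffers:

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for ObjectDetectionRectangle, coordinates normalized
// to 0..1. xMax/yMax are the bottom-right corner, which the recipe's struct
// stores in its width/height fields.
struct Detection {
    float yMin, xMin, yMax, xMax;
    int classId;
    float score;
};

// Decodes the four outputs of an SSD post-processing step:
// boxes (N x 4, [ymin, xmin, ymax, xmax]), class ids (N), scores (N), count.
std::vector<Detection> decodeDetections(const std::vector<float> &boxes,
                                        const std::vector<float> &classes,
                                        const std::vector<float> &scores,
                                        float count, float threshold)
{
    // Never read past the shortest output, whatever count says
    const std::size_t n = std::min({static_cast<std::size_t>(std::max(0L, std::lround(count))),
                                    boxes.size() / 4, classes.size(), scores.size()});
    std::vector<Detection> out;
    for (std::size_t i = 0; i < n; ++i) {
        if (scores[i] <= threshold)
            continue; // below the confidence threshold
        out.push_back({boxes[i * 4], boxes[i * 4 + 1], boxes[i * 4 + 2], boxes[i * 4 + 3],
                       static_cast<int>(std::lround(classes[i])), scores[i]});
    }
    return out;
}
```

The class id is rounded before use because the post-processing step delivers it as a float; the label lookup then works on an integer key.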

objectdetectionpainter.cpp¶

The ObjectDetectionPainter is a graphical overlay that draws a rectangle around each detected object, together with its label and confidence level. The EdgeTPUHandler requests a redraw via the detectionDone() signal, and the painter then fetches the data through the getCandidates() call.

Implementation¶

/******************************************************************************

* Include Files

******************************************************************************/

#include "objectdetectionpainter.h"

#include "edgetpuhandler.h"

#include <QPainter>

/******************************************************************************

* Class Methods

******************************************************************************/

ObjectDetectionPainter::ObjectDetectionPainter()

{

auto edgeTpu = EdgeTPUHandler::getSingleton();

if(edgeTpu == nullptr)

{

qDebug() << "EdgeTpuHandler is null";

}

else

{

QObject::connect(edgeTpu, &EdgeTPUHandler::detectionDone, this, &ObjectDetectionPainter::triggerUpdate);

}

m_pen.setWidth(2);

m_pen.setStyle(Qt::PenStyle::DotLine);

m_pen.setColor("#fff7971c");

m_inputWidth = 0;

m_inputHeight = 0;

}

int ObjectDetectionPainter::inputWidth()

{

return m_inputWidth;

}

void ObjectDetectionPainter::setInputWidth(int width)

{

m_inputWidth = width;

}

int ObjectDetectionPainter::inputHeight()

{

return m_inputHeight;

}

void ObjectDetectionPainter::setInputHeight(int height)

{

m_inputHeight = height;

}

void ObjectDetectionPainter::triggerUpdate()

{

update();

}

void ObjectDetectionPainter::paint(QPainter *painter)
{
    auto edgeTpu = EdgeTPUHandler::getSingleton();
    if (edgeTpu == nullptr)
        return;

    auto rects = edgeTpu->getCandidates();
    painter->setPen(m_pen);

    const float clientWidth = width();
    const float clientHeight = height();
    float offsetX = 0;
    float offsetY = 0;
    float scaleX = 1.0;
    float scaleY = 1.0;
    float aspectRatioCamera = 1.0;
    float aspectRatioClient = 1.0;

    // Calculate aspect ratio of the camera input
    if (m_inputHeight > 0)
        aspectRatioCamera = static_cast<float>(m_inputWidth) / m_inputHeight;
    // Calculate aspect ratio of the client area
    if (clientHeight > 0)
        aspectRatioClient = clientWidth / clientHeight;

    // The video keeps its aspect ratio, so compare the two ratios to find out
    // where the empty spaces of the view are
    if (aspectRatioClient > aspectRatioCamera) {
        // The client area is wider than the video: empty spaces on the sides.
        // offsetX moves the object detection points accordingly
        scaleX = aspectRatioCamera / aspectRatioClient;
        offsetX = (clientWidth - clientHeight * aspectRatioCamera) / 2;
    } else {
        // The client area is taller than the video: empty spaces above and below.
        // offsetY moves the object detection points accordingly
        scaleY = aspectRatioClient / aspectRatioCamera;
        offsetY = (clientHeight - clientWidth / aspectRatioCamera) / 2;
    }

    for (const auto &candidate : rects) {
        // Scale the normalized coordinates to the displayed video area
        // Top left point
        const QPoint topLeft(static_cast<int>(candidate.x * clientWidth * scaleX + offsetX),
                             static_cast<int>(candidate.y * clientHeight * scaleY + offsetY));
        // Bottom right point (width and height hold the right and bottom edges)
        const QPoint bottomRight(static_cast<int>(candidate.width * clientWidth * scaleX + offsetX),
                                 static_cast<int>(candidate.height * clientHeight * scaleY + offsetY));
        painter->drawRect(QRect(topLeft, bottomRight));
        // Draw the label together with the confidence in percent
        const QString text = QString("%1 %2%").arg(candidate.label).arg(qRound(candidate.score * 100));
        painter->drawText(topLeft, text);
    }
}
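The painter maps the normalized detection coordinates onto the displayed video, which keeps its aspect ratio and is therefore surrounded by empty spaces either on the sides or above and below. The same mapping can be written with one uniform scale factor, as in this sketch. PixelRect and mapToItem() are hypothetical names, and the sketch assumes that the video item keeps the aspect ratio and centres the image.

```cpp
#include <algorithm>

// Hypothetical rectangle in item (pixel) coordinates.
struct PixelRect { int left, top, right, bottom; };

// Maps a normalized box (0..1) from the camera image to item coordinates,
// assuming the video is scaled to fit the item while keeping its aspect
// ratio and is centred, leaving bars on the sides or above and below.
PixelRect mapToItem(float xMin, float yMin, float xMax, float yMax,
                    float camWidth, float camHeight,
                    float itemWidth, float itemHeight)
{
    // Uniform scale that fits the whole camera image inside the item
    const float scale = std::min(itemWidth / camWidth, itemHeight / camHeight);
    const float shownWidth = camWidth * scale;
    const float shownHeight = camHeight * scale;
    // Centre the scaled image; at least one of these offsets is zero
    const float offsetX = (itemWidth - shownWidth) / 2;
    const float offsetY = (itemHeight - shownHeight) / 2;
    return PixelRect{
        static_cast<int>(offsetX + xMin * shownWidth),
        static_cast<int>(offsetY + yMin * shownHeight),
        static_cast<int>(offsetX + xMax * shownWidth),
        static_cast<int>(offsetY + yMax * shownHeight)};
}
```

With a 16:9 camera (1280x720) in an 800x600 item, the scale is 0.625, the video occupies 800x450 pixels and every box is shifted down by 75 pixels. Choosing the branch by comparing the two aspect ratios, rather than by checking whether the item is landscape, is what makes this case come out right.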

Header File¶

#ifndef OBJECTDETECTIONPAINTER_H

#define OBJECTDETECTIONPAINTER_H

/******************************************************************************

* Include Files

******************************************************************************/

#include <QQuickPaintedItem>

#include <QPen>

/******************************************************************************

* Manifest Constants, Macros

******************************************************************************/

/******************************************************************************

* Class Definition

******************************************************************************/

/**

* @brief Draws rectangles and labels on each detection candidate

*/

class ObjectDetectionPainter : public QQuickPaintedItem

{

Q_OBJECT

/**

* @brief Property for camera input width

*/

Q_PROPERTY(int inputWidth READ inputWidth WRITE setInputWidth)

/**

* @brief Property for camera input height

*/

Q_PROPERTY(int inputHeight READ inputHeight WRITE setInputHeight)

public:

/**

* @brief Class constructor

*/

explicit ObjectDetectionPainter();

/**

* @brief Gets called whenever the item needs to be repainted

* @param painter pointer to the painter object

*/

void paint(QPainter *painter) override;

public slots:

/**

* @brief Schedules a repaint of all candidates

*/

void triggerUpdate();

private:

int inputWidth();

void setInputWidth(int width);

int inputHeight();

void setInputHeight(int height);

QPen m_pen;

int m_inputWidth;

int m_inputHeight;

};

#endif // OBJECTDETECTIONPAINTER_H

gstplayerjob.cpp¶

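The GstPlayerJob is a QRunnable that sets the GStreamer pipeline to the PLAYING state. main() hands it to QQuickWindow::scheduleRenderJob(), so the pipeline is started by the Qt Quick renderer once the window has begun rendering, rather than from the main thread.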

Implementation¶

/******************************************************************************

* Include Files

******************************************************************************/

#include "gstplayerjob.h"

/******************************************************************************

* Class Methods

******************************************************************************/

GstPlayerJob::GstPlayerJob(GstElement *pipeline)

{

m_pipeline = pipeline ? static_cast<GstElement *>(gst_object_ref(pipeline)) : NULL;

}

GstPlayerJob::~GstPlayerJob()

{

if(m_pipeline)

gst_object_unref(m_pipeline);

}

void GstPlayerJob::run()

{

if(m_pipeline)

gst_element_set_state(m_pipeline, GST_STATE_PLAYING);

}

Header File¶

#ifndef GSTPLAYERJOB_H

#define GSTPLAYERJOB_H

/******************************************************************************

* Include Files

******************************************************************************/

#include <QRunnable>

#include <gst/gst.h>

/******************************************************************************

* Manifest Constants, Macros

******************************************************************************/

/******************************************************************************

* Class Definition

******************************************************************************/

/**

* @brief Container class for running a gstreamer pipeline as QRunnable

*/

class GstPlayerJob : public QRunnable

{

public:

/**

* @brief GstPlayerJob creates the GstPlayer job

* @param pipeline the gstreamer pipeline to play

*/

GstPlayerJob(GstElement* pipeline);

virtual ~GstPlayerJob();

/**

* @brief run starts the GstPlayer job, which sets the pipeline to playing

*/

void run();

private:

GstElement* m_pipeline;

};

#endif // GSTPLAYERJOB_H

pipeline.cpp¶

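In the Pipeline class, the GStreamer pipeline is set up element by element. It receives an RTP stream with JPEG payload over UDP on the given port, decodes it and renders it into the QML video item. You may need to adapt the pipeline to the specification of your camera, or if you want to use another input source.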

Implementation¶

/******************************************************************************

* Include Files

******************************************************************************/

#include "pipeline.h"

#include <QDebug>

/******************************************************************************

* Class Methods

******************************************************************************/

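Pipeline::Pipeline(QObject *parent)
    : QObject(parent), m_pipeline(nullptr), m_sink(nullptr), m_videoPad(nullptr)
{
}

Pipeline::~Pipeline()
{
    // Release the pad reference taken by gst_element_get_static_pad()
    if (m_videoPad)
        gst_object_unref(m_videoPad);
    if (m_pipeline) {
        gst_element_set_state(m_pipeline, GST_STATE_NULL);
        gst_object_unref(m_pipeline);
    }
}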

Pipeline *Pipeline::createPipeline(std::string pipelineName, const int identifier, const int portNumber)
{
    // Every element name gets the identifier as suffix, so several pipelines can coexist
    const std::string id = std::to_string(identifier);

    GstElement *pipeline = gst_pipeline_new(pipelineName.c_str());
    if (!pipeline) {
        qCritical() << "Pipeline element could not be created. Exiting.";
        return nullptr;
    }

    // Source that receives data from a UDP socket
    GstElement *udpsrc = gst_element_factory_make("udpsrc", ("udpsrc" + id).c_str());
    // Capsfilter that restricts the accepted data format without modifying the data
    GstElement *filters = gst_element_factory_make("capsfilter", ("capsfilter" + id).c_str());
    // Unpack JPEG data from the RTP packets
    GstElement *rtpjpegdepay = gst_element_factory_make("rtpjpegdepay", ("rtpjpegdepay" + id).c_str());
    // Data queue that decouples network reception from decoding and rendering
    GstElement *queue = gst_element_factory_make("queue", ("queue" + id).c_str());
    // Parse the JPEG data into single frame buffers
    GstElement *jpegparse = gst_element_factory_make("jpegparse", ("jpegparse" + id).c_str());
    // Decode images from JPEG format
    GstElement *jpegdec = gst_element_factory_make("jpegdec", ("jpegdec" + id).c_str());
    // Colorspace conversion
    GstElement *videoConvert = gst_element_factory_make("videoconvert", ("videoconvert" + id).c_str());
    // Upload data into OpenGL
    GstElement *glupload = gst_element_factory_make("glupload", ("glupload" + id).c_str());
    // Video sink that renders into a QML item
    GstElement *sink = gst_element_factory_make("qmlglsink", ("qmlglsink" + id).c_str());

    // Check that all elements were created successfully
    if (!udpsrc || !filters || !rtpjpegdepay || !queue || !jpegparse || !jpegdec ||
        !videoConvert || !glupload || !sink) {
        qCritical() << "One element could not be created. Exiting.";
        gst_object_unref(pipeline);
        return nullptr;
    }

    // Disable clock synchronization in the sink: frames are rendered as soon as they
    // arrive instead of waiting for their timestamps, which is important for the
    // latency and performance of the live stream
    g_object_set(G_OBJECT(sink), "sync", FALSE, NULL);

    // Set the port number that udpsrc listens on
    g_object_set(G_OBJECT(udpsrc), "port", portNumber, NULL);

    // Accept only RTP packets with JPEG payload (payload type 26)
    GstCaps *caps = gst_caps_new_simple("application/x-rtp",
                                        "payload", G_TYPE_INT, 26,
                                        "encoding-name", G_TYPE_STRING, "JPEG", NULL);
    g_object_set(G_OBJECT(filters), "caps", caps, NULL);
    gst_caps_unref(caps);

    // Add all elements to the pipeline and link them in order:
    // udpsrc -> capsfilter -> rtpjpegdepay -> queue -> jpegparse -> jpegdec
    // -> videoconvert -> glupload -> qmlglsink
    gst_bin_add_many(GST_BIN(pipeline), udpsrc, filters, rtpjpegdepay, queue,
                     jpegparse, jpegdec, videoConvert, glupload, sink, NULL);
    if (!gst_element_link_many(udpsrc, filters, rtpjpegdepay, queue, jpegparse,
                               jpegdec, videoConvert, glupload, sink, NULL)) {
        qCritical() << "Elements could not be linked. Exiting.";
        gst_object_unref(pipeline);
        return nullptr;
    }

    // Create the pipeline class and return a pointer to it. The probe is attached
    // to the sink pad of videoconvert, where the decoded frames arrive
    Pipeline *p = new Pipeline();
    p->m_videoPad = gst_element_get_static_pad(videoConvert, "sink");
    p->m_pipeline = pipeline;
    p->m_sink = sink;
    return p;
}

void Pipeline::setProbeCallback(GstPadProbeCallback callback)

{

if(m_videoPad)

gst_pad_add_probe (m_videoPad, GST_PAD_PROBE_TYPE_BUFFER, (GstPadProbeCallback)callback, NULL, NULL);

}

GstElement *Pipeline::getGstPipeline() const

{

return m_pipeline;

}

GstElement *Pipeline::getSink() const

{

return m_sink;

}

Header File¶

#ifndef PIPELINE_H

#define PIPELINE_H

/******************************************************************************

* Include Files

******************************************************************************/

#include <QObject>

#include <gst/gst.h>

/******************************************************************************

* Manifest Constants, Macros

******************************************************************************/

/******************************************************************************

* Class Definition

******************************************************************************/

/**

* @brief A class that encapsulates the gst-functionality in a single class

*/

class Pipeline : public QObject

{

Q_OBJECT

public:

/**

* @brief The destructor

*/

virtual ~Pipeline();

/**

* @brief Creates the gst-pipeline via factory method

* @param pipelineName the name of the pipeline

* @param identifier numeric suffix appended to the name of every element in the pipeline

* @param portNumber the UDP port number that the pipeline listens on

* @return a ptr to the pipeline holder class

*/

static Pipeline *createPipeline(std::string pipelineName, const int identifier = 0, const int portNumber = 50004);

/**

* @brief Sets a probe callback function on the sink pad of the videoconvert element

* @param callback The callback function

*/

void setProbeCallback(GstPadProbeCallback callback);

/**

* @brief Gets GstElement pointer to the gst-pipeline

* @return The pointer to the pipeline

*/

GstElement* getGstPipeline() const;

/**

* @brief Gets GstElement pointer to the videosink

* @return The pointer to the videosink

*/

GstElement* getSink() const;

private:

/**

* @brief The constructor

* @param parent The QObject parent

*/

Pipeline(QObject* parent = nullptr);

GstElement* m_pipeline;

GstElement* m_sink;

GstPad* m_videoPad;

};

#endif // PIPELINE_H

main.qml¶

The main QML file is a simple view containing the GstGLVideoItem that displays the video stream and the ObjectDetectionPainter that draws the detection result on top of it.

import QtQuick 2.15

import QtQuick.Window 2.15

import org.freedesktop.gstreamer.GLVideoItem 1.0

import CrossControl 1.0

Window {

width: 640

height: 480

visible: true

title: qsTr("Tensorflow-Recipe")

// The Ethernet camera stream window

GstGLVideoItem {

id: video

objectName: "videoItem"

anchors.fill: parent

}

// Renders all the detection rectangles and labels

ObjectDetectionPainter {

anchors.fill: parent

// Adjust according to camera input size

inputWidth: edgeTPUHandler.inputWidth

inputHeight: edgeTPUHandler.inputHeight

}

}